Is Your Language Assessment Data Accurate and Reliable?

The value of language skills is increasing.

Both learners and educators are discovering that individuals who can demonstrate proficiency in more than one language improve their chances to earn college admission, secure a good job, and increase their earning potential. Assessment is the most efficient means of determining proficiency.

When you rely on a language proficiency assessment, how do you know its results are accurate and reliable? It turns out that not all assessments are created equal.

Why language assessment accuracy and reliability matter.

Assessment data and proficiency outcomes are often the basis for:

- Language program quality ratings

- Decisions about program funding

- Staff hiring and promotions

- Credentials such as State and Global Seals of Biliteracy

- College credit

- Progress of individual learners

Regardless of which assessment is used, language learners and the programs they trust need to be confident that the scores they receive are accurate and reliable. When various assessments all test the same skills, what makes them different? And what makes one better than another?

A program, or even the language teaching field as a whole, may settle on an assessment practice and find its results suitable. However, that assessment may not meet the requirements for rating accuracy and reliability. If an inaccurate thermometer indicates you have a fever when you do not, you may end up taking medication you do not need.

Accuracy and reliability matter most when scores decide whether a credential for language skills is awarded, whether a company hires a candidate, or whether a program gets funded.

How can you tell if scores are accurate and reliable?

Recent Avant research on the rating of the Writing and Speaking sections of the Avant STAMP assessments demonstrates how Avant applies rigorous standards and rating quality checks to achieve a high degree of accuracy and reliability across all of the 40+ languages that Avant tests. The research examined the following components:

- Rater training

- The rating process, using human raters and the procedures when two raters disagree on a rating

- How the final score is determined

- The following statistical measurements:

  - Exact Agreement

  - Exact + Adjacent Agreement

  - Quadratic Weighted Kappa (QWK)

  - Standardized Mean Difference (SMD)

  - Spearman’s Rank-Order Correlation (ρ)

  - 2 STAMP Levels Apart (a measure of non-adjacent agreement)

These measures can be triangulated to ensure the highest possible degree of accuracy and reliability in the Avant STAMP results.
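For readers who want to see how agreement statistics like these are typically computed, here is a minimal Python sketch. It is not Avant's implementation. It uses common textbook definitions, and several details are assumptions: the function names, the made-up ratings, the hypothetical 1 to 8 level scale, the SMD formula (difference in means divided by the pooled standard deviation), and the reading of "2 STAMP Levels Apart" as the share of responses where two raters differ by exactly two levels.

```python
from collections import Counter
from math import sqrt
from statistics import mean, variance


def agreement_rates(r1, r2):
    """Share of responses with exact, exact + adjacent, and two-level-apart ratings."""
    diffs = [abs(a - b) for a, b in zip(r1, r2)]
    n = len(diffs)
    return {
        "exact": sum(d == 0 for d in diffs) / n,
        "exact_plus_adjacent": sum(d <= 1 for d in diffs) / n,
        # Assumption: "2 levels apart" means a gap of exactly two levels.
        "two_levels_apart": sum(d == 2 for d in diffs) / n,
    }


def quadratic_weighted_kappa(r1, r2, min_level, max_level):
    """Cohen's kappa with quadratic disagreement weights.

    Undefined (division by zero) if every rating from both raters is identical.
    """
    n = len(r1)
    span = max_level - min_level
    observed = Counter(zip(r1, r2))
    hist1, hist2 = Counter(r1), Counter(r2)
    weighted_observed = weighted_expected = 0.0
    for i in range(min_level, max_level + 1):
        for j in range(min_level, max_level + 1):
            weight = (i - j) ** 2 / span ** 2
            weighted_observed += weight * observed[(i, j)] / n
            weighted_expected += weight * hist1[i] * hist2[j] / n ** 2
    return 1 - weighted_observed / weighted_expected


def standardized_mean_difference(r1, r2):
    """Assumed definition: mean difference over the pooled standard deviation."""
    pooled_sd = sqrt((variance(r1) + variance(r2)) / 2)
    return (mean(r1) - mean(r2)) / pooled_sd


def average_ranks(values):
    """Rank values from 1, giving tied values the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for k in range(start, end + 1):
            ranks[order[k]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def spearman_rho(r1, r2):
    """Spearman's rank-order correlation: Pearson correlation of the ranks."""
    x, y = average_ranks(r1), average_ranks(r2)
    mx, my = mean(x), mean(y)
    covariance = sum((a - mx) * (b - my) for a, b in zip(x, y))
    spread = sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))
    return covariance / spread


# Made-up ratings from two hypothetical raters on a hypothetical 1-8 scale.
rater_a = [3, 4, 5, 2, 6, 4, 3, 5]
rater_b = [3, 4, 4, 2, 6, 5, 3, 7]

print(agreement_rates(rater_a, rater_b))
print(quadratic_weighted_kappa(rater_a, rater_b, min_level=1, max_level=8))
print(standardized_mean_difference(rater_a, rater_b))
print(spearman_rho(rater_a, rater_b))
```

No single number tells the whole story. Two raters can agree exactly on most responses while one is still systematically harsher, which is why a bias measure such as SMD is read alongside the agreement rates.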

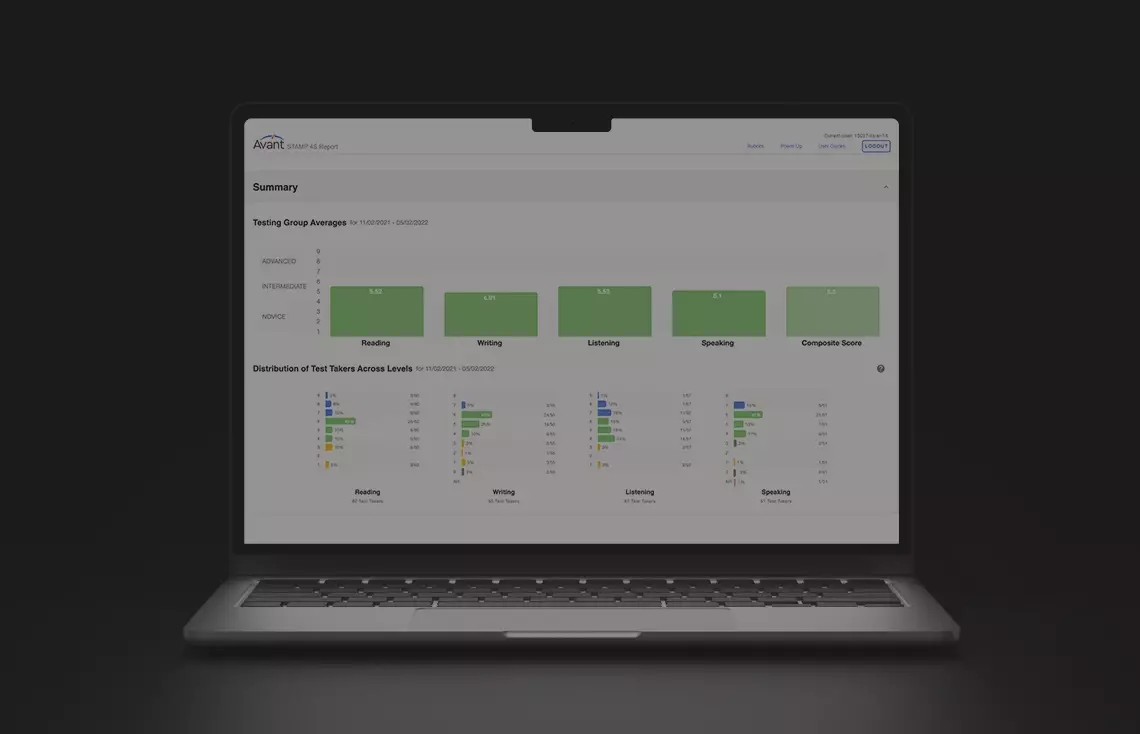

Results show that across all levels, the rating of Avant STAMP 4S and STAMP WS Writing and Speaking responses is highly consistent. The American Council on Education (ACE) has conducted an extensive review of Avant’s rating processes, accuracy, and reliability. Based on its review, ACE recommends Avant STAMP for college credit. For more statistical detail, read the full white paper on the accuracy and reliability of Avant’s rating of STAMP Speaking and Writing responses.

Verifying the accuracy and reliability of rating in a language proficiency test is critically important when evaluating whether the test is appropriate for your program. As the stakes rise for testing and documenting language skills, the question is: can you afford not to verify?

Articles you may also like:

- Discover the Potential of Your Language Programs with Avant STAMP Data

- Data is a Key to Language Proficiency

- Test Reliability and Validity