What is Score Scaling?

When test developers report test scores to end-users (school administrators, teachers, parents, the test-takers themselves, or other potential score users), it is important that the meaning of the numerical scores reported be clear and easy to use. If not, what is the point of test scores?

Test scores can come in many different flavors.

For example, the scores in some tests are reported in terms of number correct or percentage correct. Such reporting is useful in cases when every single test taker takes exactly the same test, as in the case of linear, fixed-form tests.

The Avant STAMP (STAndards-based Measurement of Proficiency) assessments, however, make use of more modern psychometric and test development approaches and are not linear tests. All STAMP Reading and Listening tests are computer-adaptive, meaning that the difficulty of the test adapts in real time to the estimated language ability of each test-taker. This results in more accurate measurement of test-takers’ language proficiency and provides for a more pleasant experience for test-takers than is normally the case with a linear test, since test-takers will not come across a large number of items substantially below or above their actual level of proficiency. This powerful test assembly and deployment approach is only possible by means of a psychometric technique called item-response-theory (IRT). In IRT, every single test item (a.k.a. test question) is associated with its own scientifically-measured difficulty level. In the case of STAMP, the difficulty level of each item on the test is calculated through an IRT analysis of the responses of hundreds (and in many cases, thousands) of representative test-takers. This allows us to calibrate the items in terms of their difficulty and to make sure that only the very best items are used in each STAMP test.

The STAMP scoring algorithm also makes use of this item difficulty information in order to calculate each test-taker’s final STAMP level, based on which items they attempted during the test, their response to each item, and the ability that test-takers need to demonstrate in order to score at each of the STAMP levels (the latter is determined through a process called standard setting). Therefore, given the adaptive nature of the STAMP assessments and given that each item has a certain statistical level of difficulty associated with it, reporting the STAMP scores in terms of number correct (e.g., 23 out of 30) or percentage correct (76.6%) is neither meaningful nor appropriate.

As we will soon discuss, item-response theory (IRT), which forms the statistical basis of development and scoring of the Avant STAMP tests, uses a score scale that is not very intuitive for end-users of the STAMP tests. For example, the IRT scale has both negative and positive values. Telling a test taker on their score report that their reading proficiency in the German STAMP 4S test is -1.4 would not be helpful and would violate the requirement for clear and easy-to-use scores discussed above. For this reason, it is necessary that the STAMP score values based on IRT be converted to a more meaningful and easily interpretable score scale. A scale is basically a spectrum of potential measurement values and test developers have to decide on the reference points of the score scale before the scores can be reported.

Understanding a Scale’s Reference Points

Three scales that readers are likely familiar with are the Celsius, Fahrenheit, and Kelvin temperature scales. Although all three are temperature scales, their points of reference and interpretation differ substantially. The same can apply to different scales used for reporting language proficiency scores.

In the Celsius scale, a degree of 0 ℃ indicates the measurement point at which water freezes at sea level, whereas the minimum possible measurement value on the Celsius scale is -273.15 ℃, which is the point at which there is no molecular activity whatsoever in a substance. On the Fahrenheit scale, however, the measurement point at which water freezes at sea level is 32 degrees F, not 0 degrees F. On the Fahrenheit scale, – 459.67 F indicates the minimum possible measurement value, when there is no molecular activity in a substance. As we can see, in neither the Celsius nor the Fahrenheit scales, does a zero actually mean complete absence of something. It’s simply a reference point that only makes sense with regard to the complete scale and its possible, attainable values.

For the temperature scales, the only scale that has a true zero point is the Kelvin scale. In the Kelvin scale, the 0 K measurement point actually means no molecular activity at all, with zero marking the minimum possible value in the Kelvin scale. In the Kelvin scale, therefore, negative values are not possible, differently from the Celsius and Fahrenheit scales (and as we will shortly see, the IRT scale). All three temperature scales have no real limit to their maximum values, since there is no known limit to how hot something can be.

Now, can we really say that one scale is better than the other? Not really. All three scales are perfectly valid on their own and are widely used in different contexts, with certain scales being deemed more appropriate by users depending on specific contexts. One thing that unites these three scales, however, and which makes them perfectly suitable for precise measurement, is the fact that the distance between any two measurement points in the scale indicates the same difference in temperature. In other words, the difference in molecular activity between 35 ℃ and 37 ℃ is exactly the same as that between 89 ℃ and 91 ℃. This is a characteristic that we at Avant believe is at the heart of good measurement, and certainly one that we use for our STAMP scores.

Despite the usefulness of looking at the three familiar temperature scales above and seeing how appropriate they are in their given contexts for the measurement of a construct such as temperature, it is important to understand that some of the characteristics they possess make them inappropriate for the measurement of a construct such as language proficiency. For example, one would find it virtually impossible to explain what negative language proficiency means or how someone can have zero ability in a language; even a person who has never studied or been in contact with a given language previously will have some (albeit minimum) knowledge of at least borrowed words in that language. No language proficiency test can claim that someone has zero language proficiency, since it would be impossible for a given test to assess all possible scenarios in which a person may show some, even if very basic, understanding of a word or phrase in the language. All language tests are bound by the items present in the test and what they are capable of measuring, which means that language tests may not have a zero point of measurement, but may have a minimum point of measurement, representing the point below which the test is unable to make any claims. The same applies to the maximum point of reference in a test; no matter how many items a test contains, it will never be able to measure all the language proficiency of an individual. As such, a valid scale for a language proficiency test such as the STAMP tests will have a minimum reference point (used for test takers who get all test items they saw incorrectly), no zero reference point, and will have a maximum point of reference (used for test takers who answer all test items they saw correctly).

IRT Measurement and the STAMP Scores

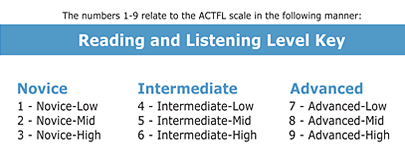

As noted above, it is important that equal intervals in a scale used for reporting scores on a language proficiency test indicate the same difference in language proficiency. All levels from the STAMP test (levels 1 – 9) are aligned to the ACTFL Proficiency levels (Novice Low through Advanced High), as can be seen below:

Despite the alignment of the STAMP levels to the ACTFL proficiency levels and despite the usefulness of the ACTFL proficiency levels for indicating a test taker’s general level of ability in the language, the ACTFL levels themselves do not conform to the type of numerical scaled scores we are looking for. Firstly, the meaning of the interval difference in the ACTFL (and therefore STAMP) levels is not the same regardless of the point on the scale. For example, it takes a higher amount of language ability to move from Intermediate High (STAMP level 6) to Advanced Low (STAMP level 7) than it does to move from Novice High (STAMP level 3) to Intermediate Low (STAMP level 4). For this very reason, the proficiency levels are depicted as an inverted pyramid, and not as a square or rectangle. Secondly, despite the usefulness of the proficiency levels for indicating where a certain language learner stands in terms of their language proficiency, students scoring at the same STAMP level may actually have slightly different abilities in the language and may have answered different numbers of items correctly in the STAMP test, even if they happened to have seen exactly the same items through the STAMP adaptive algorithm. Therefore, despite the important usefulness of the STAMP and ACTFL levels in understanding test-takers’ language proficiency, these levels are not as fine-grained as some end-users of our test scores would like them to be.

For instance, a school may only have ten seats in a special honors section of French Reading. What if fourteen of the students have reached a STAMP level 9 in Reading? How can the school pick 10 out of the 14 students for the honors class? Randomly picking ten may be deemed an acceptable solution but we at Avant Assessment can provide a better and more accurate way of helping in this case. As mentioned above, Avant Assessment makes use of a statistical measurement technique called Item Response Theory to calibrate all of the items in the Reading and Listening sections of the (adaptive) STAMP tests, to align the number of questions a test-taker gets right in their specific test path to the STAMP levels and therefore ACTFL levels they are aligned to, and to finally, produce scaled scores that provide score-users with a more fine-grained measure of the language ability of each test taker than would be possible if only the STAMP levels were to be reported.

Scaling the STAMP Scores

Once all items in a specific section of a STAMP test have been calibrated through IRT, we are able to assign an IRT ability estimate (also referred to as theta in IRT terminology) to each student based on the items they got right or wrong in the specific path they followed in each of the Reading and Listening sections of their STAMP test. Once we have this value, we are then able to scale this value (hence the term, score scaling) so that we can report more fine-grained scores, in order to supplement the reporting of the STAMP level achieved. By scaling the IRT scores, we are then able to ensure all scaled scores are positive (no negative values) and that score users, such as the hypothetical French school above, are able to zoom in more on students’ proficiency, even if students happen to have scored at the same STAMP level.

Each of the Reading and Listening sections of each STAMP test must be scaled separately. Therefore, scaled scores for Spanish Reading cannot be compared directly with scaled scores for Spanish Listening, or with scaled scores for Chinese Reading. In other words, the STAMP scaled scores are language and section specific.

We scale the IRT scores in the Reading or Listening sections of each of our tests through a simple linear transformation, seen in the formula below:

The scaling above ensures that all possible scaled scores for a given section of a STAMP test are positive numbers without decimals, which are much more intuitive than scores ranging from – 4 to + 4, which are more typical of IRT. The linear scaling seen in the formula above also ensures that the distance between any two scaled scores indicates the same difference in ability at any point in the scale.

Interpretation of the Scaled Scores

Imagine we have the following students, who took the Listening section of the Japanese STAMP 4S test:

- Student A scaled score: 589

- Student B scaled score: 612

- Student C scaled score: 677

- Student D scaled score: 700

The difference in Japanese listening proficiency between Student A and Student B in Japanese (23 scaled points) is the same as the difference in Japanese listening proficiency between Student C and Student D (23 points). If two students achieved the same STAMP level in Japanese Listening (e.g., STAMP level 4 – Intermediate Low), but one of them had a scaled score that is 20 points above the other, we have strong support to believe that the student with the higher scaled score is more proficient than the student with the lower scaled score. The larger the difference between their scaled scores, the more confident we can be that the difference is meaningful and that the two students indeed are not equally proficient. The scaled scores can also be useful in cases where a student may seem to not be making progress after a year of study and be “stuck” at the same proficiency level. A comparison between their scaled score one year ago and their scaled score from the current administration may show small incremental gains in their proficiency, even if such increments were not sufficient to move them into the next STAMP level.

One thing should be kept in mind, however: all assessments have a certain margin of measurement error associated with their scores. For example, the standard error of measurement (SEM) reported by ETS for the Listening section of the TOEFL iBT, which uses a score scale ranging from 0 – 30 is 2.38 scaled points (Educational Testing Services, 2018). In turn, for the SAT section scores, with a score range of 200 – 800, the standard error of measurement is 30 points (College Board, 2018). Since it is not feasible to assess each student on many different days, and across hundreds of test items, every test result is a snapshot of the level a given test taker was able to sustain on that specific day that they took the test, and across the specific items they answered during their test administration. Naturally, a test such as the STAMP 4S, whose Reading and Listening sections are computer-adaptive, which includes a large number of items targeting each test-taker’s estimated level in real time, and which is developed to strict qualitative and quantitative standards, will tend to have a smaller error of measurement and be more effective and efficient than shorter, non-adaptive, linear tests that do not follow the same rigor (Schultz, Whitney, & Zickar, 2014).The average standard error of measurement for the scaled scores in the Reading and Listening sections of the STAMP tests is 10 scaled-score points. This statistic is easily derived from the type of IRT software we employ at Avant.

The error of measurement associated with the STAMP scaled scores is quite small given the psychometric rigor and adaptive nature of our tests. Although we advise that test score analyses be performed primarily based on the STAMP level achieved, we at Avant suggest that scaled scores may be considered in very specific cases when higher-stake decisions are to be made based on STAMP test scores, such as when the STAMP scores are used to award State Seals of Biliteracy (SSB) or to award credit by exam (CBE). In such higher-stakes cases, if a test-taker’s scaled score in Reading or Listening happens to be within 10 points or less of the minimum scaled score that could qualify them for either the SSB or CBE, Avant’s position is that a school or district may, at their discretion, have such test-takers retake the STAMP test (given its adaptive nature, there is a good chance test takers will not see exactly the same items as in the previous administration). If on this second administration the test taker’s scaled score leads to a STAMP level that meets the requirements for either the SSB or CBE, Avant’s position is that the scores from this second administration may be used in place of the scores from the first administration.

The two scenarios discussed above are higher-stakes scenarios in which consideration of the test’s small margin or error may be warranted (remember that all tests have a margin of error).

We recommend that it is generally appropriate to use the STAMP scaled scores for traditional uses such as for ongoing yearly analysis or students’ growth and for program evaluation.

To see the tables of scaled scores currently available for the STAMP assessments, click here.

References:

College Board (2018). SAT: Understanding Scores. Retrieved from https://collegereadiness.collegeboard.org/pdf/understanding-sat-scores.pdf

Educational Testing Services (2018). Reliability and Comparability of TOEFL iBT Scores. TOEFL Research Insight Series (vol. 3). Retrieved from www.ets.org/s/toefl/pdf/toefl_ibt_research_s1v3.pdf

Schultz, K. S., Whitney, D. J., & Zickar, M. J. (2014). Measurement Theory in action. Case studies and exercises (2nd ed.). London/New York: Routledge. College Board (2018). SAT: Understanding Scores. Retrieved from https://collegereadiness.collegeboard.org/pdf/understanding-sat-scores.pdf

Educational Testing Services (2018). Reliability and Comparability of TOEFL iBT Scores. TOEFL Research Insight Series (vol. 3). Retrieved from www.ets.org/s/toefl/pdf/toefl_ibt_research_s1v3.pdf

Schultz, K. S., Whitney, D. J., & Zickar, M. J. (2014). Measurement Theory in action. Case studies and exercises (2nd ed.). London/New York: Routledge.